Angels in the Architecture, pt IV

On the limits of 3M's Visual Attention Software in architectural research

[This is the fourth in a series of long posts with a lot of images. If you’d like to go back to the beginning you can do so here. Consider clicking over to the Substack site if the images won’t load fully in your inbox.]

Hello readers. Sorry it’s been a while—I’ve been busy!

This is the penultimate post in a series I started last March. Here’s a quick rundown of the earlier installments:

Part I (Introducing 3M’s VAS software; early experiments)

Part II (Further experiments and the search for “objective” ratings)

Interlude I (Competitors to 3M VAS)

Interlude II (Comparisons between different visual attention outputs)

Part III (Experiments in interpreting visual attention outputs)

One theme I’ve encountered time and again in preparing this series is the slippery nature of interpreting VAS heatmaps (and indeed eye-tracking results more generally). Most articles offer competing definitions, albeit with some overlap, of what a heatmap of a “well-designed” or “engaging” building looks like. Eye-tracking simulation is a relatively new field, and it’s no big surprise that there’s no readymade manual or established rubric for heatmap interpretation. Still, if this technology has any long-term potential we should be able to observe some sort of correlations and make basic predictions.

But can we?

The 2020 NCAS Poll

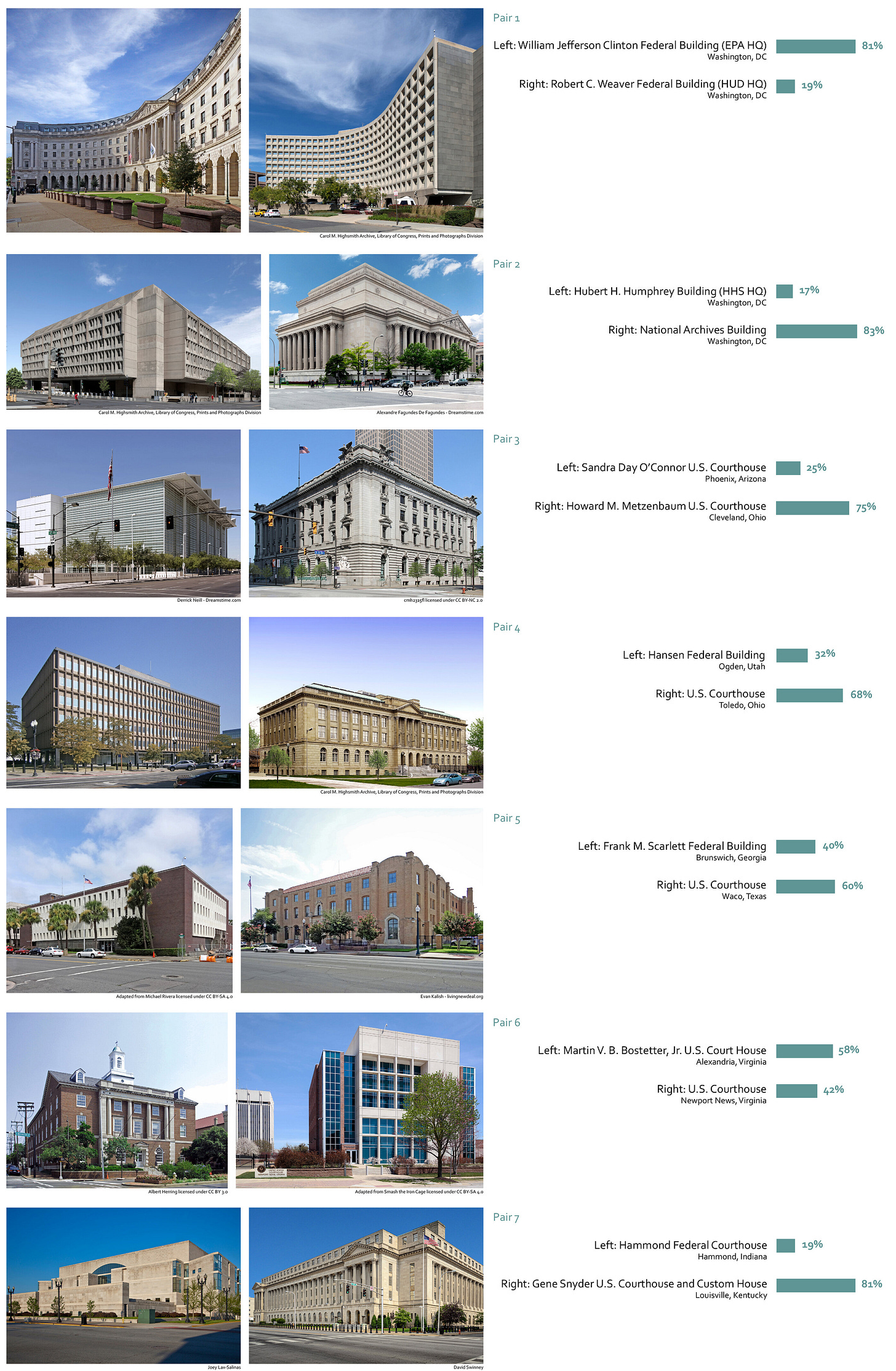

In August of 2020, the National Civic Art Society paid the Harris Poll to survey 2,039 adults in the United States about courthouses and federal buildings. The poll took on a structure that I’ve jokingly referred to as “Two Cool Buildings,” wherein two static photographs of building exteriors are set side by side and the audience is encouraged to select the “better” building from the two on offer.

The exact survey question and methodology are worth quoting at length, if only because so many people online misrepresent what the poll actually measured.

THE SURVEY QUESTION

The survey comprised seven pairs of images of existing U.S. courthouses and federal office buildings. The buildings were not identified in any way. Each pair comprised one building in a traditional style and one building in a modern style. The order in each pair was randomized, as was the order of the questions. For each pair, the survey question was: “Which of these two buildings would you prefer for a U.S. courthouse or federal office building?”

IMAGE SELECTION

From a long list of many dozens of photos, the seven pairs of images were very carefully selected and edited to ensure fair comparisons. Factors such as sky color, angle of photo, light conditions, distance from building, weather conditions, nature of foreground, nature and quality of street furniture, presence of street trees, parked cars, and passing people were all controlled for either perfectly (e.g., sky color) or as far as possible via careful photo selection and editing. In all cases the aim was to reduce to a minimum factors other than the building itself that might influence people’s preferences. In addition, the use of a range of seven pairs of photos rather than just one comparison permits confident conclusions to be drawn.

This level of rigor in controlling for variations between photos is refreshing, but it masks a deeper issue: looking at two photographs without any additional context is a bad way to make decisions about the built environment.[1]

But these carefully chosen exterior photographs are good subject matter for the sorts of VAS comparisons I’ve been looking at, so let’s dive in.

As the figures above demonstrate, a majority of adults surveyed prefer U.S. courthouses or federal office buildings in more traditional styles. We can also get a sense of the magnitude of this preference: consider the 58/42 split in Pair 6 versus the 83/17 divide for Pair 2.

All of which is to say, this poll gives us an excellent foundation for testing some of the claims around eye-tracking and VAS.

In a 2022 blog post at The Genetics Of Design, Ann Sussman and Hernan Rosas shared the results of an eye-tracking study using the same images from the NCAS poll above. Sixty-two human participants looked at paired images on a screen while their eye movements were recorded by iMotions Online, a browser-based eye-tracking tool.

Per Sussman and Rosas:

“Eye-tracking data is collected and aggregated to form heatmaps which glow reddest where people look most, and fade to yellow, then green, and finally, no color at all, in areas ignored…And that was the remarkable, and remarkably consistent, finding this eye-tracking pilot-study revealed; no matter where the buildings were in the U.S., traditional civic architecture consistently drew viewer attention and focus while modern-style counterparts did not.”

Let’s see what those heatmaps look like:

It’s certainly the case that in some of the instances above, the red hotspots are more prominent on the images of the traditional buildings. But there are more than a few hotspots on the contemporary designs, too. The seventh and final pairing seems to be more or less a toss-up. The pairs with the strongest discrepancy in the original NCAS polling don’t necessarily show the strongest disparities in hotspot placement. If there’s a meaningful correlation between these heatmaps and the results of the NCAS survey, I can’t see it.
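If you wanted to put a number on “I can’t see it,” one option is Spearman’s rank correlation between the poll margins and some per-pair measure of how lopsided the heatmaps are. Here’s a minimal Python sketch, assuming you’ve already boiled each heatmap pair down to a single hypothetical number (say, the share of red-zone area falling on the traditional building). Neither the NCAS poll nor the Sussman and Rosas post publishes such a number, so that reduction step and the function names below are my own illustration.

```python
from statistics import fmean


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Plain Pearson correlation of two equal-length sequences."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("a constant sequence has no correlation")
    return cov / (vx * vy) ** 0.5


def spearman(poll_margins, attention_shares):
    """Spearman's rho: Pearson correlation of the rank-transformed data.

    poll_margins: percentage-point margins for each pair (hypothetical input).
    attention_shares: a per-pair heatmap disparity score (hypothetical input).
    """
    if len(poll_margins) != len(attention_shares) or len(poll_margins) < 2:
        raise ValueError("need two equal-length sequences of at least 2 items")
    return pearson(average_ranks(poll_margins), average_ranks(attention_shares))
```

For Pair 2 the margin would be 83 - 17 = 66 points, and for Pair 6 it would be 58 - 42 = 16; the other five margins and all seven attention-share values would have to come from the original data. With only seven pairs, even a moderate rho is fragile, so any result is a rough signal rather than proof.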

But my real interest here is in how this translates to VAS. 3M, the makers of the VAS tool, assert that “VAS predicts the first 3-5 seconds of eye gaze behavior (first-glance vision) with 92% accuracy.”[2]

To be fair to Sussman and Rosas, the heatmaps above are the result of a 12-second interval of viewing these paired images, so a direct comparison to VAS output won’t be fully apples-to-apples. But it still should tell us something. How closely does VAS analysis of these side-by-side images match up with the eye-tracking results from 62 human participants? Below I’ve added another column with each pair processed by VAS on the “Other” setting.

When I compare the center column to the rightmost, I don’t come away with the sense that both are giving me the same information. I looked in two earlier posts at some of the challenges of making comparisons across platforms, and all those same caveats hold here. Even so, I find it troubling for this body of research that the actual human-subject output from the iMotions study diverges so strongly from the VAS results. And this is the best-case scenario, where each image has been selected and edited with care to minimize the sorts of distortions and distractions that arise from busy clouds, foreground clutter, etc.
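One standard way to quantify how closely two attention maps agree is the pixel-wise Pearson correlation coefficient, usually abbreviated CC in the saliency-model literature. Below is a minimal Python sketch, assuming both maps have been exported as same-sized grayscale intensity grids. The colorized screenshots shown here would first need converting back to intensities, a lossy step that isn’t shown, and `map_correlation` is a name I’ve made up for illustration.

```python
from statistics import fmean


def map_correlation(map_a, map_b):
    """Pixel-wise Pearson correlation (the "CC" saliency metric) of two heatmaps.

    map_a, map_b: equal-sized 2D grids (lists of rows) of attention intensity,
    e.g. a human eye-tracking map and a VAS map of the same image (assumed
    already converted from color to grayscale).
    Returns a value in [-1, 1]; 1 means the same spatial pattern up to scale.
    """
    if len(map_a) != len(map_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(map_a, map_b)
    ):
        raise ValueError("heatmaps must have the same dimensions")
    a = [v for row in map_a for v in row]
    b = [v for row in map_b for v in row]
    ma, mb = fmean(a), fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        raise ValueError("a uniform heatmap has no spatial pattern to correlate")
    return cov / (va * vb) ** 0.5
```

Because correlation ignores overall brightness and contrast, a CC near 1 means two maps put their peaks in the same places regardless of the color scale each tool uses. A value near 0 would be consistent with the kind of mismatch I’m describing between the center and rightmost columns.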

There’s another wrinkle to be unpacked here regarding how these heatmaps should be interpreted. The second post in this series looked at a paper by Nikos Salingaros and Alexandros Lavdas. In that paper—focused on VAS work, not actual eye-tracking like the Sussman and Rosas blog post—Salingaros and Lavdas tell us:

“The more engaging of the two images will draw the most attention in two distinct ways: the more “relaxing” of the two shows a more uniform VAS coverage, while the more disturbing of the pair reveals more red hot spots.”

Some readers may remember that Salingaros and Lavdas describe a further series of analyses of VAS heatmaps using a software tool called ImageJ. These analyses seek to translate VAS outputs into numerical values using equations which “penalize” a heatmap for having too many discrete red hot spots.
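This is not the Salingaros and Lavdas equation, but any penalty for “too many discrete red hot spots” depends on a counting step: threshold the heatmap, then count separate hot regions, which is roughly what a threshold-then-particle-analysis workflow in ImageJ does. Here is a rough Python stand-in, assuming intensities scaled to 0..1. The 0.8 cutoff, the 4-connectivity rule, and the name `count_hotspots` are my own arbitrary choices, not theirs.

```python
from collections import deque


def count_hotspots(heatmap, threshold=0.8):
    """Count connected regions whose intensity is at or above `threshold`.

    heatmap: 2D grid (list of rows) of intensities, assumed scaled to 0..1.
    threshold: hypothetical "red" cutoff; not taken from any published method.
    Regions are 4-connected (up/down/left/right neighbours only).
    """
    rows = len(heatmap)
    cols = len(heatmap[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if heatmap[r][c] < threshold or seen[r][c]:
                continue
            # New hotspot found: flood-fill it so its pixels aren't recounted.
            count += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (
                        0 <= ny < rows
                        and 0 <= nx < cols
                        and not seen[ny][nx]
                        and heatmap[ny][nx] >= threshold
                    ):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count
```

The count is sensitive to both choices: lower the threshold and neighbouring spots merge into one, switch to 8-connectivity and diagonally touching spots join up. That sensitivity is worth keeping in mind whenever a score hinges on the number of hot spots.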

Consider also this 2023 presentation by Brandon Ro, which discusses the use of VAS to interpret the images from the NCAS poll. The presentation incorporates the methods introduced by Salingaros and Lavdas while introducing some additional approaches, all in service of arriving at a quantitative Visual Attention Coherence Score for each of the 14 photographs being analyzed.

The work Ro presents was completed with colleague Hunter Huffman, and an earlier version of some of their research can be found in a poster presentation from the 2022 Intermountain Engineering, Technology and Computing Conference.

Upon first viewing, I believed that the presentation in the 2023 video represented further research and analysis of the VAS output already generated for the 2022 poster. A closer comparison reveals that this is not quite the case. Below are images extracted from the poster (left) and the video (right).[3]

To recap: each column shows the results of the same photographs analyzed with the same software by the same research team. What could explain these differences? Perhaps the algorithms “under the hood” of VAS were updated between 2022 and 2023. Perhaps Ro and Huffman used a different image resolution or different settings in VAS calculations. Perhaps the inclusion of a slim black gutter between images in each pair in the 2023 set influenced how VAS “saw” them. Maybe it’s a combination of some or all of the above.

The back half of Ro’s presentation in the video above outlines some additional steps for analysis regarding Gaze Sequence and some of the other VAS output options. All of these analyses would see their results changed, some in major ways, if they operated on the 2022 output set rather than the 2023 one.

I’ve been paying attention to this realm for about a year now, and ultimately I feel no closer to understanding the approaches and decisions underlying this body of work. This is the last post that looks closely at the published work in this field. I have one more piece in the works on the general attitude and approach of eye-tracking research, but for now I will leave the reader with a few lingering questions:

Why do VAS outputs differ so drastically from what Sussman and Rosas captured using the iMotions Online tool?

In what circumstances should we consider VAS to be more (or less) accurate than actual human-subject eye-tracking studies?

Why do Ro and Huffman’s 2023 VAS heatmaps look different from their 2022 ones? Why generate new heatmaps in the first place?

How does one derive a sense of preference from a heatmap? If a given heatmap does not “match” the preference indicated by the polling, does that indicate a flaw in the heatmap or a flaw in the poll? And are our answers to those two questions dependent on whether the heatmap came from human subjects or VAS?

Minor housekeeping:

I’m hoping to have the final piece of this series wrapped up next week, and then I’ll be moving on to some other projects. The look and feel of this Substack will probably change in accordance with that pivot. Still figuring a lot of stuff out, so stay tuned.

Thanks again for reading and have a beautiful weekend.

—Vitruvius Grind

[1] This is not an argument that appearance doesn’t matter. We all, regardless of individual aesthetic inclinations, want to see buildings with visual charm and character. But we all have other expectations too: our buildings should shelter us from the wind and rain, keep us safe in fires and earthquakes, provide a comfortable interior environment for users, address the functional needs of occupants, respond smartly to a changing climate, address the physical context in which they’re built, etc. A single exterior photograph simply cannot communicate how a building meets these challenges.

[2] See “How accurate are VAS results?” in the VAS Basics category: https://vas.3m.com/faqs

[3] The image set on the left overlays the Gaze Sequence data from VAS on top of the heatmaps. There’s not a quick and elegant way to do this with the screenshots taken from the video, but rest assured that the gaze sequence data results diverge in the two data sets just as the heatmaps do.